Understanding Core ML: Machine Learning for iOS Apps

One success factor for any new technology, such as AI or ML, is how easily users can access it. A technology usually attracts more users when it is convenient to use. Devices that sit close to the user, such as phones, watches, speakers, and cameras, are called “edge devices” and play a crucial role here.

Consider how Apple launched the iPod, a groundbreaking product for its time that was marketed as “1,000 songs in your pocket”. It dramatically changed the experience of listening to music and revolutionised the music industry. Targeting these small edge devices can have a huge impact on your business as well. Edge devices are small, easy to access, and capable of increasingly demanding operations.

On-Device (Edge) AI:

With the recent rise of AI and ML, there is growing demand for computing resources to handle complex and powerful operations. These operations are typically performed in the cloud, where more computational power is available.

Alongside this, there is a need to access the same technology from edge devices, which gives rise to edge AI. With edge AI, you can run AI/ML operations on-device, with low latency and offline, using limited computing resources. Edge AI has its own limitations and complexities too.

Fine! Let’s do an experiment. It’s hammer time!

Experimenting with Edge AI using Core ML & iOS

To explore edge AI, I chose my iPhone as the edge device to start with. I then came up with a simple app idea (PicQuery) that could help us learn about AI and related topics.

Apple provides a native, efficient framework called “Core ML” for running AI/ML operations on iOS devices. Third-party frameworks such as TensorFlow Lite and ExecuTorch are available as well.

PicQuery: My idea is simple. It is an app that extracts text from photos and lets users interact with that text through Q&A. Note that we are not generating new content here; instead, we locate the answer within the extracted text itself.

My plan is to build this using the Core ML framework, the Vision API, and SwiftUI on iOS.

Architecture stack

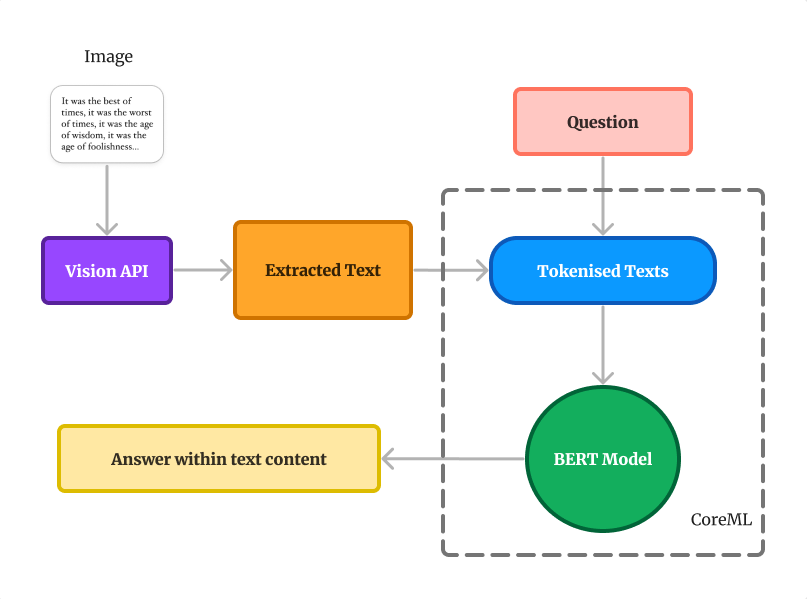

To build this app, I opted for three main parts: Core ML, a BERT model, and the iOS Vision API. In our approach, the BERT model is optimised for Core ML, which targets Apple hardware.

1. Core ML

A machine learning framework developed by Apple that runs models on-device. It supports a wide range of model types while minimising memory footprint and power consumption.

Optimised for Apple hardware, it efficiently supports the latest models, including cutting-edge neural networks designed to understand text, images, video, sound, and other rich media.

Core ML models run on the user’s device, removing the need for a network connection and keeping the app responsive and the user’s data private.
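As a rough illustration of what “running on the user’s device” looks like in code, the sketch below loads a compiled model from disk and lets Core ML decide which hardware to use. The helper name `loadOnDeviceModel` and its URL parameter are assumptions made for this example, not part of PicQuery.

```swift
import CoreML
import Foundation

/// Loads a compiled Core ML model (.mlmodelc) from disk.
/// `loadOnDeviceModel` is a hypothetical helper, not taken from PicQuery.
func loadOnDeviceModel(at compiledModelURL: URL) throws -> MLModel {
    let configuration = MLModelConfiguration()
    // Let Core ML pick the CPU, GPU, or Neural Engine as available.
    configuration.computeUnits = .all
    return try MLModel(contentsOf: compiledModelURL, configuration: configuration)
}
```

No network call is involved: the model file ships with the app, or is downloaded once, and inference happens locally.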

2. BERT Model

BERT stands for Bidirectional Encoder Representations from Transformers. It is an open-source machine learning model for natural language processing (NLP). It uses a bidirectional encoder to generate contextualised representations of words.

BERT leverages a transformer-based neural network to understand human language. It is pre-trained on large amounts of unlabelled text data and then fine-tuned on labelled data for specific NLP tasks.

BERT uses a bidirectional approach, considering both the left and right context of each word in a sentence. Instead of analysing the text sequentially, BERT looks at all the words in a sentence simultaneously.

BERT is a powerful tool for natural language processing tasks such as sentiment analysis, named entity recognition, and question answering. It is widely used in industry and academia for its ability to analyse the relationships between words in a sentence bidirectionally.

3. iOS Vision API

Vision is a framework provided by Apple that allows developers to perform computer vision tasks on images and videos, including in real time, on iOS devices. It provides functionality such as face tracking, object tracking, and text detection.

Other Apple frameworks such as Core ML, Metal, and ARKit tightly integrate with the iOS Vision API, which lets developers create immersive AR experiences and perform machine learning tasks on images and videos.

The iOS Vision API is a powerful tool for developers who want to create interactive ML-powered experiences that run smoothly on iOS devices.

Thanks to Apple’s Bionic chips & SwiftUI!

How does it work?

This is how Core ML is integrated with the BERT model and the iOS Vision API.

Once an image (with text content) is uploaded, it is processed by the Vision API to extract the text from the image.
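A minimal sketch of this extraction step, using Vision’s `VNRecognizeTextRequest`, might look like the following. The helper name `extractText` is hypothetical, and the sketch assumes the iOS 15 SDK or later, where `results` is already typed as text observations.

```swift
import Vision
import CoreGraphics

/// Runs Vision's text recognizer on an image and returns the recognized
/// lines joined by newlines. `extractText` is a hypothetical helper name.
func extractText(from image: CGImage) throws -> String {
    let request = VNRecognizeTextRequest()
    request.recognitionLevel = .accurate   // favor accuracy over speed
    request.usesLanguageCorrection = true

    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])         // synchronous, so call it off the main thread

    let observations = request.results ?? []
    // Each observation offers ranked candidates; keep the best one per line.
    return observations
        .compactMap { $0.topCandidates(1).first?.string }
        .joined(separator: "\n")
}
```

The returned string is the “document” that the later question-answering steps search for an answer.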

Next, the extracted text is tokenised so that it can be passed to the BERT model.

To use the BERT model, we must import its vocabulary. The sample creates a vocabulary dictionary by splitting the vocabulary file into lines, each of which holds one token.
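The vocabulary step can be sketched as below. This assumes the usual BERT layout, where a token’s ID is its zero-based line number in the file; the function name `makeVocabulary` is illustrative and not taken from the sample.

```swift
import Foundation

/// Builds a token -> ID dictionary from vocabulary text with one token per line.
/// Assumption: a token's ID is its zero-based line number.
func makeVocabulary(from contents: String) -> [String: Int] {
    // Keep empty lines while splitting so that line numbers stay aligned with IDs.
    let lines = contents.split(omittingEmptySubsequences: false, whereSeparator: \.isNewline)
    var vocabulary: [String: Int] = [:]
    for (lineIndex, line) in lines.enumerated() where !line.isEmpty {
        vocabulary[String(line)] = lineIndex
    }
    return vocabulary
}
```

In an app, `contents` would typically come from reading the bundled vocabulary file into a `String`.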

The BERT model requires you to convert each word into one or more token IDs. Before you can use the vocabulary dictionary to find those IDs, you must divide the document’s text and the question’s text into word tokens.
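One simple way to split text into word tokens is sketched below. It is a simplified stand-in for a real tokenizer (a production app might use `NLTokenizer` from the NaturalLanguage framework instead), and the name `wordTokens` is an assumption. Each token is kept as a `Substring` of the original text, so its position in the source is preserved.

```swift
/// Splits text into word tokens. Runs of letters and digits form words;
/// punctuation and symbols become single-character tokens; whitespace is dropped.
/// Tokens are Substrings of `text`, so they remember where they came from.
func wordTokens(in text: String) -> [Substring] {
    var tokens: [Substring] = []
    var wordStart: String.Index? = nil
    for index in text.indices {
        let character = text[index]
        if character.isLetter || character.isNumber {
            if wordStart == nil { wordStart = index }
        } else {
            if let start = wordStart {
                tokens.append(text[start..<index])   // close the current word
                wordStart = nil
            }
            if !character.isWhitespace {
                tokens.append(text[index...index])   // punctuation as its own token
            }
        }
    }
    if let start = wordStart { tokens.append(text[start...]) }
    return tokens
}
```

The same function can be applied to both the question and the document text.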

Using the vocabulary dictionary, tokens are converted into IDs, which are then converted into the model’s input format.
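A sketch of this lookup is shown below, assuming the standard WordPiece scheme used by BERT: greedy longest match first, with continuation pieces prefixed by `##`. The second function lays the IDs out in the common BERT question-answering order of `[CLS]` question `[SEP]` document `[SEP]`, padded to a fixed length. The function names, the padding scheme, and the fixed length are assumptions; the exact input format depends on the model you use.

```swift
/// Greedy longest-match-first WordPiece lookup for a single, already lowercased word.
/// Returns `[unknownID]` when some part of the word has no match in the vocabulary.
func wordpieceIDs(for word: String, vocabulary: [String: Int], unknownID: Int) -> [Int] {
    let characters = Array(word)
    var ids: [Int] = []
    var start = 0
    while start < characters.count {
        var end = characters.count
        var matchedID: Int? = nil
        while end > start {
            // Pieces after the first carry the "##" continuation prefix.
            let piece = (start > 0 ? "##" : "") + String(characters[start..<end])
            if let id = vocabulary[piece] {
                matchedID = id
                break
            }
            end -= 1
        }
        guard let id = matchedID else { return [unknownID] }
        ids.append(id)
        start = end
    }
    return ids
}

/// Lays out IDs as [CLS] question [SEP] document [SEP], padded to `length`.
func makeInputIDs(question: [Int], document: [Int],
                  clsID: Int, sepID: Int, padID: Int, length: Int) -> [Int] {
    var ids = [clsID] + question + [sepID] + document + [sepID]
    precondition(ids.count <= length, "input is longer than the model's sequence length")
    ids += Array(repeating: padID, count: length - ids.count)
    return ids
}
```

For Core ML, the resulting array would then be copied into an `MLMultiArray` with the shape the model declares.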

The app then calls the model’s prediction and locates the answer by analysing the BERT model’s output.
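BERT question-answering models typically output two scores per token: one for “the answer starts here” and one for “the answer ends here”. A minimal sketch of picking the best span from such scores is shown below. The name `bestSpan` and the exhaustive search are illustrative assumptions, not necessarily how PicQuery does it.

```swift
/// Picks the highest-scoring answer span from per-token start and end scores.
/// Only spans of at most `maxLength` tokens are considered.
func bestSpan(startLogits: [Double], endLogits: [Double], maxLength: Int) -> ClosedRange<Int>? {
    precondition(startLogits.count == endLogits.count, "one start and one end score per token")
    precondition(maxLength >= 1, "a span covers at least one token")
    var best: ClosedRange<Int>? = nil
    var bestScore = -Double.infinity
    for start in startLogits.indices {
        let lastEnd = min(start + maxLength - 1, endLogits.count - 1)
        for end in start...lastEnd {
            // A span's score is the sum of its start score and its end score.
            let score = startLogits[start] + endLogits[end]
            if score > bestScore {
                bestScore = score
                best = start...end
            }
        }
    }
    return best
}
```

In practice the search would be restricted to the document tokens, so that the “answer” cannot land inside the question itself.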

Finally, the answer is extracted from the original text content.
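If the word tokens are kept as `Substring` slices of the original text, this last step reduces to slicing from the first answer token to the last. The sketch below assumes the answer span has already been mapped from model token positions back to word-token indices; `answerText` is a hypothetical helper.

```swift
/// Returns the slice of `text` covered by the word tokens in `span`.
/// Assumes every element of `tokens` is a Substring of `text`.
func answerText(in text: String, tokens: [Substring], span: ClosedRange<Int>) -> Substring {
    let start = tokens[span.lowerBound].startIndex
    let end = tokens[span.upperBound].endIndex
    return text[start..<end]
}
```

Slicing the original text, rather than re-joining tokens, preserves the original casing, spacing, and punctuation of the answer.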

Note: You can find the full implementation of the technical part here

What’s next?

Core ML offers an entertaining approach to experimenting with AI/ML on your iPhone. Recent developments indicate that Apple is actively participating in the AI evolution race, with expectations of further advancements. I’m personally keen to dive deeper into this topic and plan to share more insights in the future.

GitHub – prabakarviji/picture-query: A SwiftUI app using CoreML & Vision